Emails that show what Meta really thought about Molly Russell

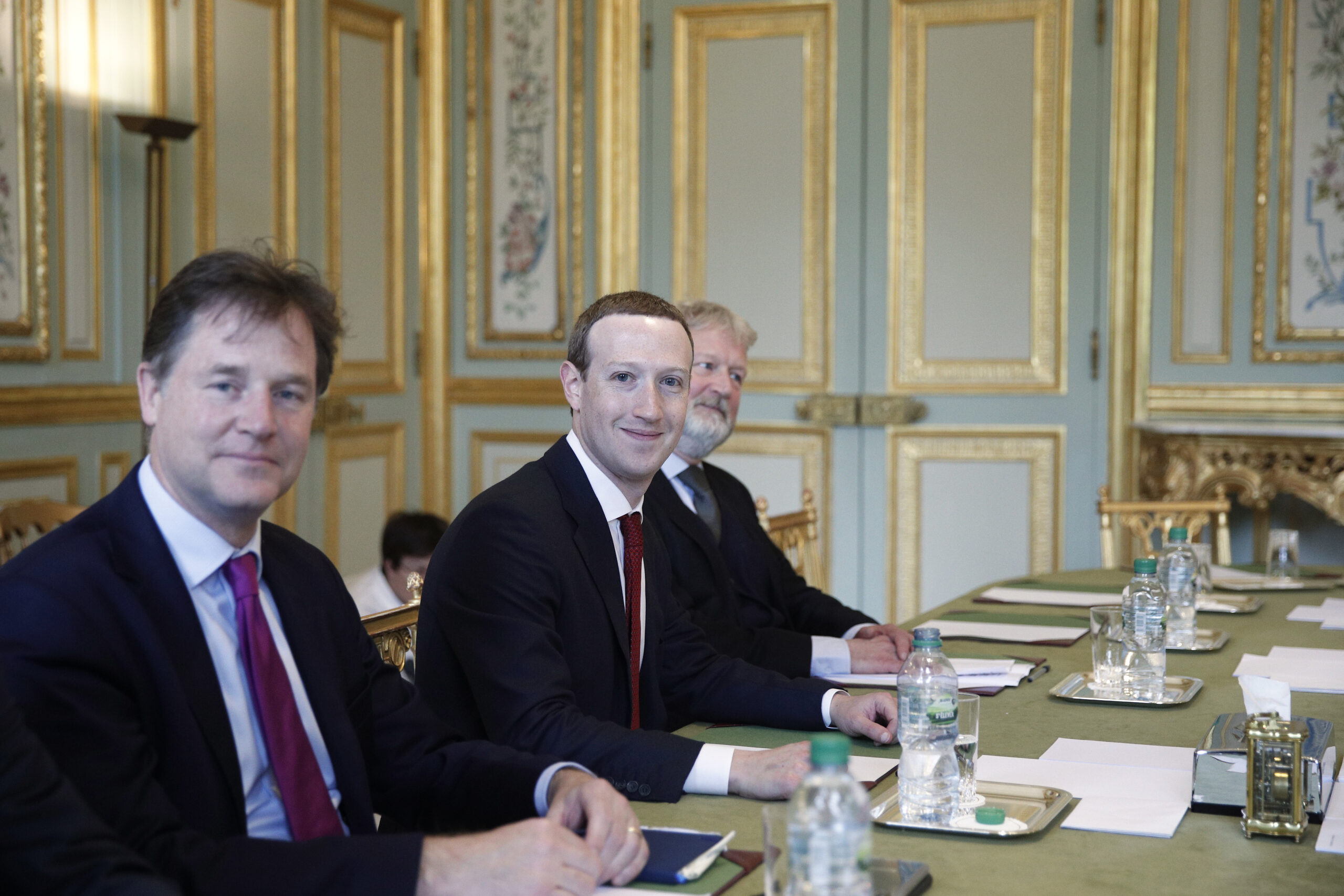

Meta executives including Sir Nick Clegg privately criticised the company’s policy of leaving up images of self-harm, even though at the time, after the death of the British schoolgirl Molly Russell, they were publicly claiming such material was safe for teenagers to post.

Internal emails, cited in a lawsuit filed against Meta, which owns Facebook and Instagram, show that Clegg said the company’s stance appeared “convoluted and evasive”.

In another email, Nicola Mendelsohn, a vice-president, said the self-harm content allowed on Instagram was “truly horrific”. She also contradicted a claim made by other executives including Clegg that experts supported the decision to leave some self-harm content on the app.

Molly, from Harrow in northwest London, had viewed more than 2,000 images portraying self-harm, suicide and depression on Instagram in the months before she took her own life in November 2017 at the age of 14.

Meta, then known as Facebook, was heavily criticised in January 2019 after Molly’s father, Ian, first spoke publicly about her death.

Since then, Meta’s handling of youth safety has come under global scrutiny. The lawsuit brought by a group of US states alleges that Meta put profit before protecting young users and that it has “repeatedly misled the public about the substantial dangers of its social media platforms”.

At the inquest into Molly’s death in September 2022, Andrew Walker, the coroner, concluded that she died from an act of self-harm while suffering depression and the negative effects of online content.

In the aftermath of her death, Meta defended allowing some of these images on Instagram with the claim that they could help young people.

During an interview with the BBC in January 2019, Clegg said: “At the moment, they [experts] have said that even some of these distressing images are better to keep if it helps young people reach out for help.”

But internal emails recently unsealed by the court in California reveal that the former deputy prime minister wrote to Mark Zuckerberg, the chief executive, Sheryl Sandberg, the chief operating officer, and Adam Mosseri, the head of Instagram, to say: “Our present policies and public stance on teenage self-harm and suicide are so difficult to explain publicly that our current response looks convoluted and evasive.”

Then, in February 2019, Mendelsohn emailed Clegg and Sandberg, saying: “No experts will come out and speak on our behalf around the fact that we leave things up so that we can help people with self-harm tendencies … I would urge us to think again about why we are allowing this imagery to stay up — it is truly horrific.”

Clegg, 57, whose salary is reported to be £2.7 million, with a bonus worth almost £10 million in 2022, joined Meta in 2018 and was promoted to president for global affairs in 2022.

According to the court filing, an internal document said in 2019 that there was a palpable risk of “similar incidents” to Molly’s death because Instagram’s algorithms were “leading users to distressing content”. The filing also alleges that some Meta executives believed that Instagram was “unhealthy” for young users, quoting an email from Karina Newton, then Instagram’s head of policy, in 2021.

The filing includes a series of other allegations, including that the company suppressed internal data about harmful content on its platforms.

The document quotes an internal Meta survey that found that during 2021, 8.4 per cent of users aged between 13 and 15 had seen content relating to self-harm on Instagram within the previous seven days.

When Meta claimed that research into social media’s impact on mental health was inconclusive, an unnamed employee is alleged to have described this as akin to tobacco companies denying that cigarettes caused cancer, particularly as Meta’s own studies had shown a negative effect.

Meta said: “We routinely make decisions to help keep people, particularly teens, safe on our apps,” adding that removing sensitive content could affect engagement “but we do it anyway to protect our community”.

Molly had saved posts including one from an account called “Feeling Worthless” that said “All I want to do is close my eyes and never open them again”.

At Molly’s inquest, Elizabeth Lagone, Facebook’s head of youth wellbeing policy, repeatedly defended the posting of such images on Instagram, saying that they were “people sharing very difficult feelings”. Testifying under oath, Lagone claimed that such images were safe for teenagers to see, saying: “I think it’s important that people have a voice and can express themselves.”

She emphasised that Facebook worked “extensively with suicide and self-injury and youth safety experts in the development of our policies”.

Ian Russell, who set up a suicide prevention charity, the Molly Rose Foundation, in his daughter’s memory, said there was a gap “between the pronouncements that the company makes publicly and what they say privately”.

He added: “When Lagone was questioned ‘Do you think this post is safe?’, she repeatedly answered ‘I do not know’. That hard, defensive way that Meta chose to contribute to the inquest seems very different from the evidence that was recently unsealed. There you can see senior executives within Meta having a debate behind closed doors that was very different.”

Other recently released Facebook documents from a court case in New Mexico allege that in a 2021 document staff raised concerns that one of the firm’s algorithms was connecting young people with potential paedophiles.

This follows the publication in September 2021 of a major investigation by the Wall Street Journal that alleged that the company knew Instagram was “toxic” for teenage girls, and exposed weaknesses in its responses to human trafficking and drug cartels. The investigation was based on internal documents leaked by the whistleblower Frances Haugen.

Earlier this month, Meta said that it would introduce measures in the coming weeks to hide content about suicide, self-harm and eating disorders from children.

Russell added: “We welcome every step taken towards digital safety. However, as [the Molly Rose Foundation’s] recent research has shown, harmful content such as Molly saw over six years ago is still readily found online, and it is being recommended to young users by the platforms’ algorithms — despite a raft of new safety measures that have been introduced. So we will remain unconvinced about the effectiveness of these new measures until there is a demonstrable improvement that clearly protects children on Instagram.”

Meta said that the US lawsuit mischaracterised its work “using selective quotes and cherry-picked documents”. The company added: “We routinely make decisions to help keep people, particularly teens, safe on our apps.”
