Fontan Casino (Фонтан казино): Official Site, Mirror and Bonuses 2025

Play games from top providers with fast payouts. Fontan Casino offers thousands of slots and live games.

| Parameter | Value |
|---|---|
| Founded | 2021 |
| License | Curacao eGaming #GLH-OCCHKTW-2025 |
| Currencies | RUB, USD, EUR, BTC, USDT |
| Number of games | 4200+ |
| Min. deposit / withdrawal | 100 RUB / 500 RUB |
| Welcome bonus | 100% + 100 free spins |
| Payment methods | SBP, cards, crypto, Qiwi |
| Withdrawal time | up to 24 hours |

Fontan Casino Overview

Fontan Casino has been genuinely operating since 2021. I played here myself for about three months: payouts arrive, and they arrive quickly, especially via SBP (the Russian Faster Payments System). The game library runs to more than 4,200 titles, with slot machines from Pragmatic Play, NetEnt and Microgaming, including jackpot slots such as Mega Moolah. The live casino is powered by Evolution Gaming and offers roulette, blackjack and baccarat; the dealers work smoothly and there is almost no lag. The minimum deposit is 100 rubles, which is convenient for newcomers, and withdrawals start at 500 rubles. Supported currencies include rubles, US dollars, euros and bitcoin. Fontan Casino is licensed in Curaçao, and the license number is displayed on the site. SSL encryption is in place to protect user data.

Fontan Mirror: How to Get Access

A Fontan mirror is a lifeline when the main site is blocked. The official site is sometimes unavailable, but the mirrors keep working and are updated regularly. There are several ways to find a current one. First, ask support: write in the chat and they will send you an up-to-date link. Second, check your email: registered users receive new addresses through the mailing list. Third, look on gambling forums, but be careful there, because some links may be fakes. A Fontan mirror fully replicates the main site, with the same games and the same account, and is sometimes even faster. A VPN also works, though it is not always necessary.

Registration at Fontan Casino

Registration is simple and takes about a minute. Click "Register", enter your email and choose a password. Then pick a currency; rubles are the best choice if you want to avoid conversions. Confirm your email via the link, and you can start playing. Withdrawals, however, require verification. This is standard: a photo of your passport or driver's license, and the check usually takes about a day. Keep in mind that withdrawals are unavailable until you are verified. The personal account area is convenient, with a history of bets, deposits and withdrawals, and you can set gaming limits to play responsibly.

Fontan Bonuses and Promotions

The welcome bonus is 100% on the first deposit plus 100 free spins, up to a maximum of 50,000 rubles. The wagering requirement is x35, which means that before the bonus can be withdrawn you must place bets totaling 35 times the bonus amount. Free spins are usually tied to specific slots, for example Book of Dead. There is also weekly cashback of up to 10%. The loyalty program awards points for bets, which raise your VIP status and unlock personal bonuses and faster payouts. Promo codes are handed out from time to time, so keep an eye on the news. But read the terms, especially the wagering conditions.

- Welcome package: 100% + 100 free spins
- Cashback: up to 10% every week
- Free spins for deposits on Fridays
- Tournaments with prize pools
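The x35 wagering rule is easy to underestimate, so here is a minimal Python sketch of the arithmetic. It assumes, as the bonus description says, that the bonus is a 100% match capped at 50,000 rubles and that the multiplier applies to the bonus amount only (some casinos also count the deposit); free-spin winnings are ignored. The function name `wagering_turnover` is hypothetical and is not the casino's own formula.

```python
def wagering_turnover(deposit_rub: float,
                      match_pct: float = 100.0,
                      max_bonus_rub: float = 50_000,
                      wager: int = 35) -> tuple[float, float]:
    """Return (bonus, total bets required to clear the bonus).

    Hypothetical helper. Assumptions:
    - the bonus is a percentage match on the deposit, capped at max_bonus_rub;
    - the wager multiplier applies to the bonus amount only;
    - free-spin winnings are not included.
    """
    bonus = min(deposit_rub * match_pct / 100, max_bonus_rub)
    return bonus, bonus * wager


if __name__ == "__main__":
    for deposit in (1_000, 2_000, 80_000):
        bonus, turnover = wagering_turnover(deposit)
        print(f"deposit {deposit} RUB -> bonus {bonus:.0f} RUB, "
              f"bets needed {turnover:.0f} RUB")
```

For example, under these assumptions a 2,000-ruble deposit earns a 2,000-ruble bonus and requires 70,000 rubles in total bets, while any deposit above 50,000 rubles hits the cap, so the required turnover tops out at 1,750,000 rubles.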

Slots and Live Casino

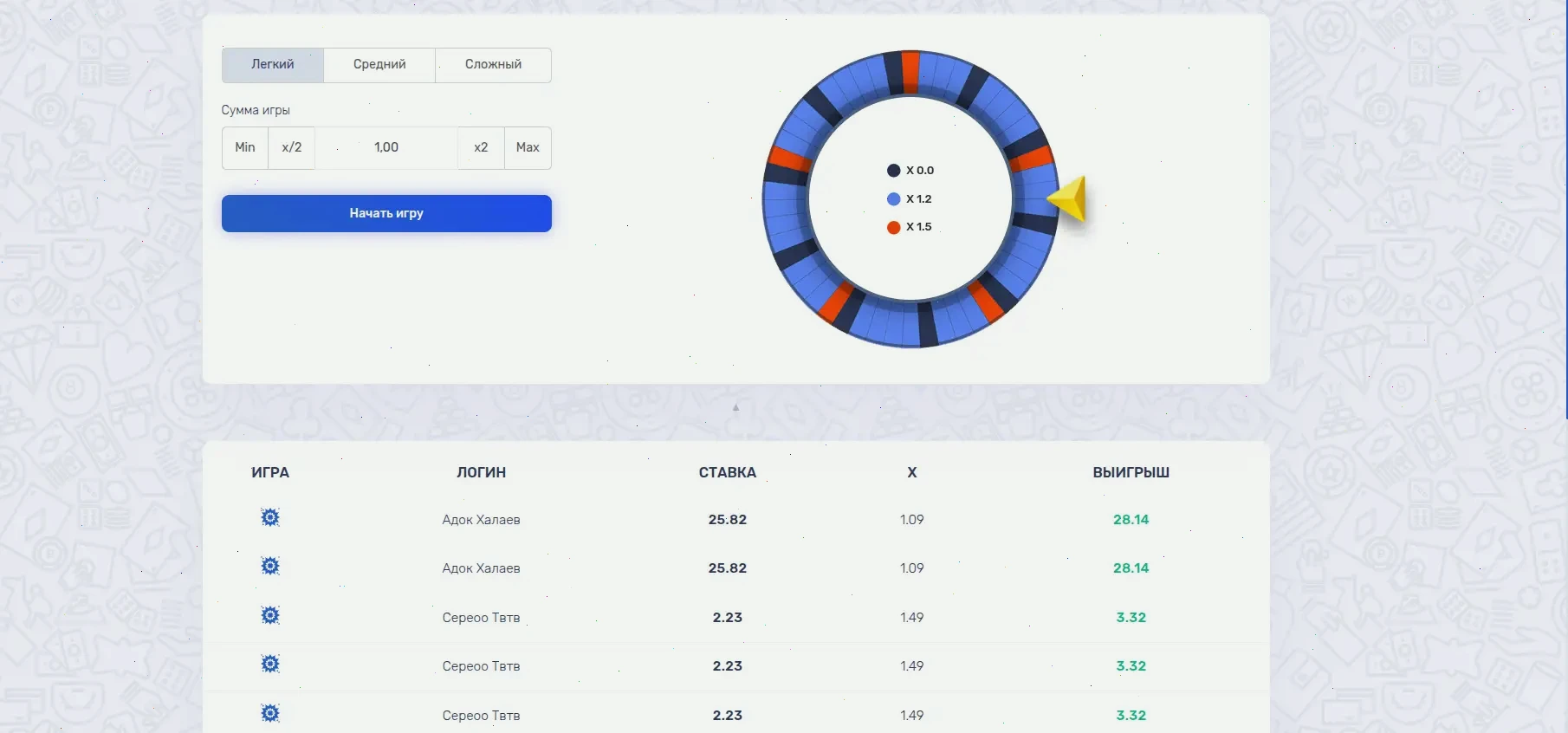

Slot machines are the core of the casino, and there are thousands of them: classic slots, video slots and progressive jackpot slots. Providers include Pragmatic Play (e.g. Gates of Olympus), NetEnt (Starburst) and Play'n GO (Book of Dead), along with less familiar studios such as Yggdrasil and Nolimit City. A demo mode lets you try games without spending money. The live casino offers roulette, blackjack and poker from Evolution Gaming, with dealers in real time and live bets. Minimum bets start at 10 rubles. There are also crash games such as Aviator, which are growing in popularity.

Deposits and Withdrawals

Deposits are credited instantly. Methods: SBP (the fastest), bank cards (Visa/Mastercard), e-wallets (Qiwi, WebMoney) and cryptocurrencies (Bitcoin, USDT). The minimum is 100 rubles. There are no fees: exactly the amount you deposit is credited. Withdrawals take longer, up to 24 hours, although in practice SBP payouts often arrive within a couple of hours. Limits: a minimum of 500 rubles and a maximum of 600,000 rubles per month for unverified accounts, with higher limits after verification. Document checks are a standard security procedure.

| Method | Min. deposit | Min. withdrawal | Withdrawal time |
|---|---|---|---|
| SBP | 100 RUB | 500 RUB | 1-12 hours |
| Bank card | 100 RUB | 1000 RUB | up to 24 hours |
| Bitcoin | 0.001 BTC | 0.002 BTC | up to 12 hours |
| Qiwi | 100 RUB | 500 RUB | 1-6 hours |